There's a quiet standoff happening inside enterprises right now. On one side: engineering teams eager to ship AI-powered integrations using the Model Context Protocol (MCP) and real-time streaming. On the other side: security and compliance leads — people like Morgan, whose job it is to make sure nothing hits production until it plays by the rules.

Morgan isn't anti-AI. She's anti-risk. And right now, the protocols that make AI integrations powerful are the same ones that her security infrastructure was never designed to handle.

This is the streaming problem, and it's stalling AI adoption at exactly the moment enterprises need to move fast.

The Core Tension: Streaming Meets Request-Response

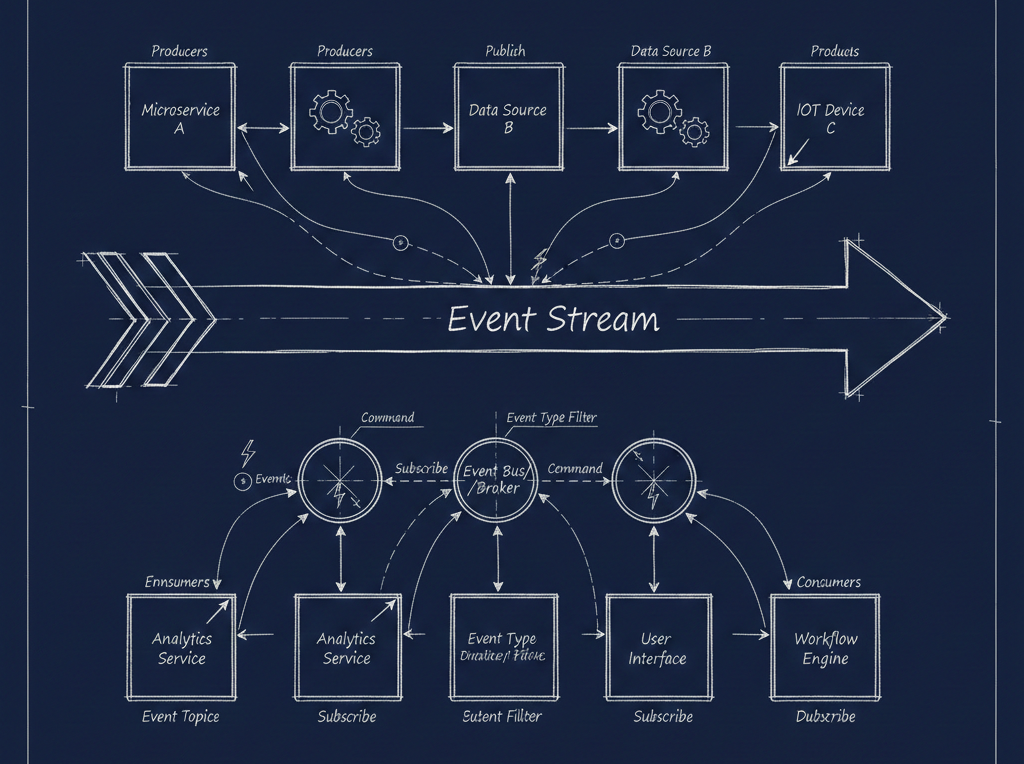

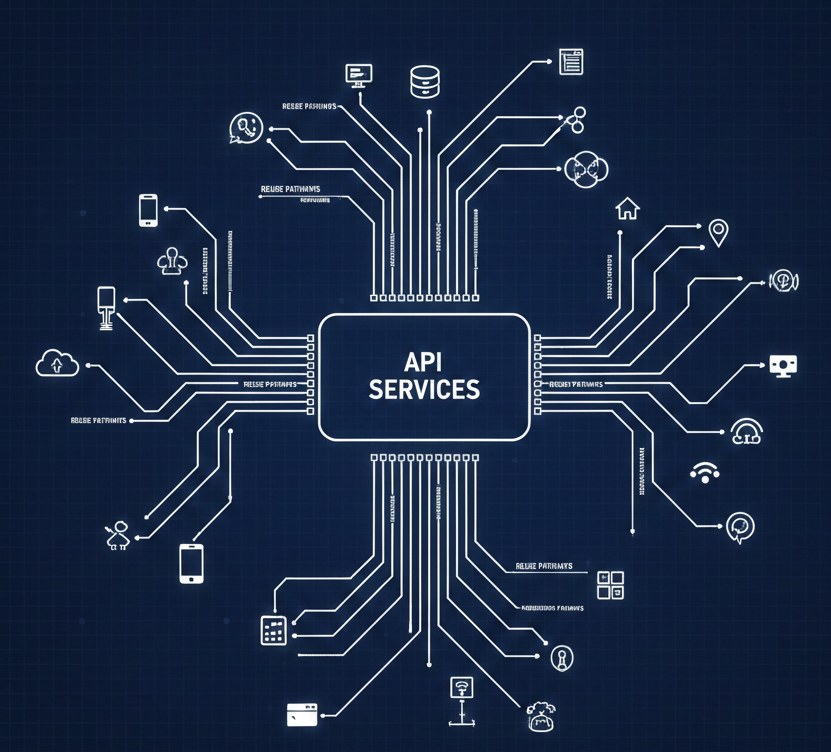

MCP and the broader ecosystem of AI services depend heavily on streaming protocols. Server-Sent Events (SSE), long-lived HTTP connections, and WebSocket-like patterns are the backbone of real-time AI capabilities — tool calling, context retrieval, live completions, agent orchestration. These aren't nice-to-haves; they're fundamental to how modern AI integrations work.

Enterprise security infrastructure, on the other hand, was built for a different era. Corporate proxies, Web Application Firewalls (WAFs), intrusion detection systems, and Data Loss Prevention (DLP) tools all assume a tidy request-response model. A client sends a request. A server sends a response. The connection closes. Security policies inspect, log, and approve traffic based on this pattern.

Streaming breaks that assumption. An SSE connection stays open. Data flows continuously from server to client. There's no clean "response" to inspect in the traditional sense. Long-lived HTTP connections look, to many security tools, like something suspicious — a slow exfiltration, a hanging connection, an anomaly worth terminating.

The result: Morgan's team flags MCP streaming traffic as non-compliant, and the integration project grinds to a halt.

The Real-World Impact

This isn't a theoretical problem. When streaming protocols collide with enterprise security policies, the consequences are concrete and costly.

Projects stall or die. AI integration initiatives that depend on real-time capabilities — think live document analysis, streaming agent responses, or interactive tool use — get blocked at the security review stage. Teams spend weeks in approval cycles, trying to explain why their traffic patterns don't fit existing policy templates.

Shadow IT flourishes. When the official path is blocked, teams find workarounds. They route traffic through personal accounts, use consumer-grade tunnels, or spin up unsanctioned infrastructure that bypasses corporate controls entirely. The security team's worst nightmare isn't a streaming connection — it's the streaming connection they can't see.

Capabilities get downgraded. To stay compliant, teams fall back to polling-based architectures or batch processing. This works, technically, but it strips out the real-time responsiveness that made the AI integration valuable in the first place. You end up with a version of the future that feels like the past.

Engineering budgets bleed. Making streaming work within enterprise security constraints is possible, but it's expensive. Custom proxy configurations, bespoke middleware layers, protocol translation services — all of this takes significant engineering effort that could be spent building actual product features.

Why This Problem Is Harder Than It Looks

It's tempting to frame this as "security teams need to get with the times," but that's both unfair and unproductive. The challenge is genuinely structural.

Enterprise security policies exist for good reasons. They protect against data exfiltration, command-and-control channels, and unauthorized data flows — all of which, at a protocol level, can look remarkably similar to legitimate streaming traffic. A long-lived connection pushing continuous data from an internal server to an external endpoint? That's either MCP doing its job or a compromised host phoning home. The security tooling often can't tell the difference.

Additionally, compliance frameworks like SOC 2, HIPAA, and FedRAMP have requirements around traffic inspection, logging, and auditability. Streaming connections make all of these harder. How do you log a response that's still in progress? How do you inspect data that arrives as an open-ended sequence of events rather than as one complete message? How do you apply DLP rules to a stream?

These aren't questions Morgan is asking to be difficult. They're questions auditors will ask, and she needs answers before anything goes to production.

Paths Forward

There's no single fix, but there are patterns emerging that bridge the gap between streaming AI protocols and enterprise security requirements.

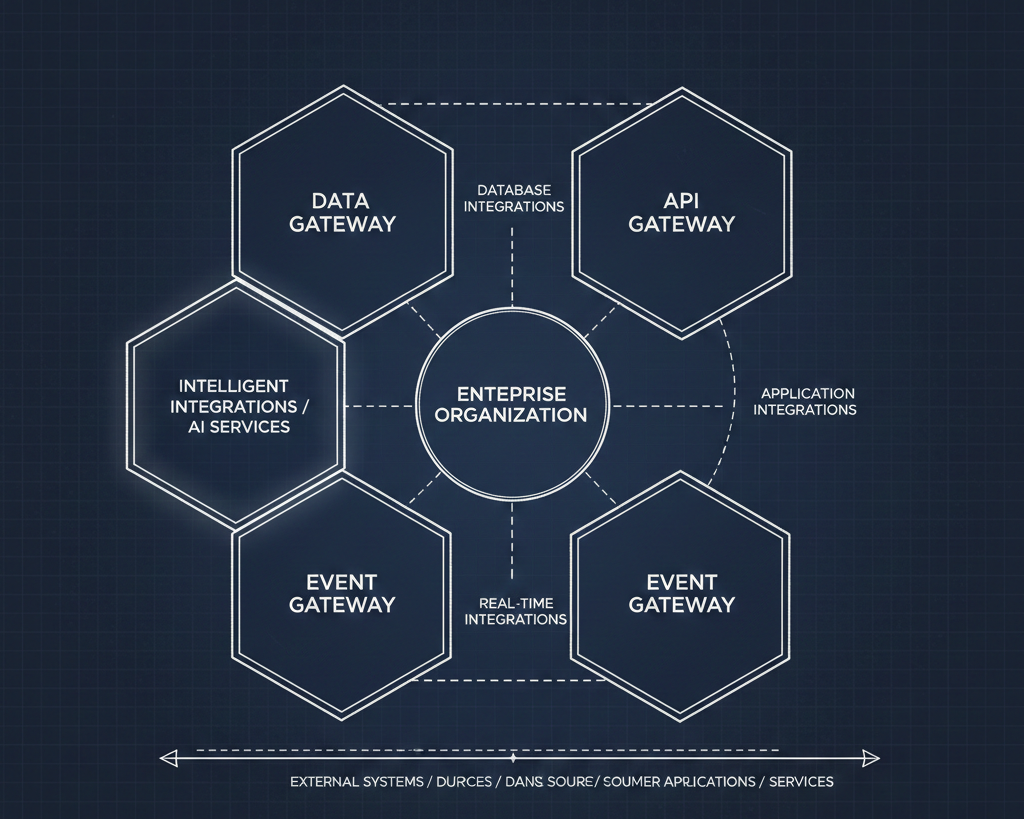

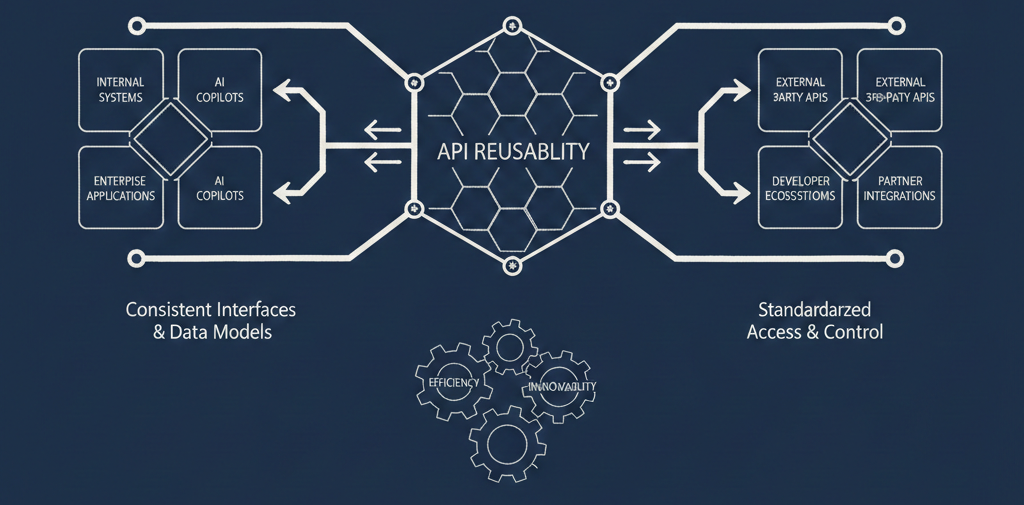

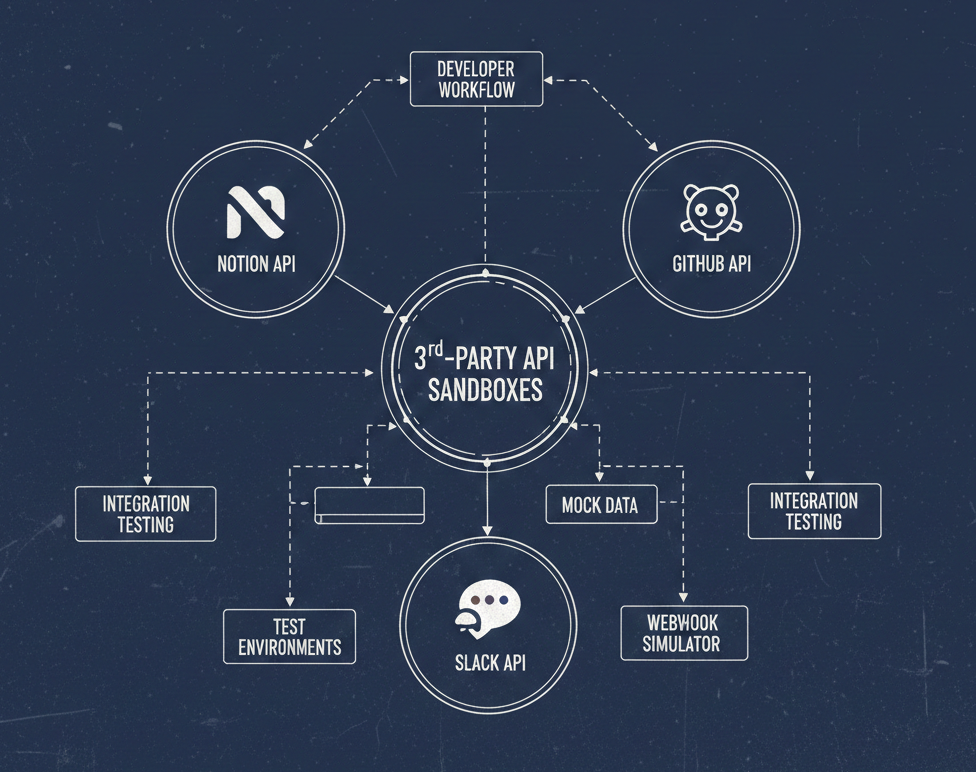

Protocol-aware proxies. A new generation of proxies and gateways understands SSE and streaming HTTP natively. Rather than treating these connections as anomalies, these tools can inspect streaming data in-flight, apply policy rules to individual events within a stream, and maintain proper audit logs. If your organization is evaluating API gateways, streaming-awareness should be a top-tier requirement.
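To make "policy rules on individual events" concrete, here is a minimal sketch of what such a proxy does internally. It is an illustration, not any vendor's implementation: the `inspect_stream` function, the audit record fields, and the SSN-style pattern are all hypothetical, and the parser handles only the `event` and `data` fields of SSE.

```python
import re
from dataclasses import dataclass
from typing import Iterable, Iterator, List


@dataclass
class SSEEvent:
    event: str = "message"
    data: str = ""


def parse_sse(lines: Iterable[str]) -> Iterator[SSEEvent]:
    """Group raw SSE lines into events; a blank line terminates each event."""
    event_type, data_lines = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            if data_lines:  # events without data are not dispatched
                yield SSEEvent(event_type, "\n".join(data_lines))
            event_type, data_lines = "message", []
        elif line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        else:
            name, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # SSE strips one leading space from the value
            if name == "event":
                event_type = value
            elif name == "data":
                data_lines.append(value)


# Hypothetical policy rule: block anything that looks like a US SSN.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def inspect_stream(lines: Iterable[str], audit_log: List[dict]) -> Iterator[SSEEvent]:
    """Apply the policy per event, record a verdict, and forward allowed events."""
    for index, ev in enumerate(parse_sse(lines)):
        allowed = SSN_PATTERN.search(ev.data) is None
        audit_log.append({
            "index": index,
            "event": ev.event,
            "bytes": len(ev.data.encode("utf-8")),
            "allowed": allowed,
        })
        if allowed:
            yield ev
```

Because every event gets its own audit record, the log is complete up to the most recent event even while the connection is still open, which is one possible answer to the "response still in progress" question auditors raise.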

Chunked inspection architectures. Instead of trying to inspect a complete "response" (which doesn't exist in streaming), security infrastructure can be configured to inspect data in chunks as it flows. This requires rethinking DLP and content inspection pipelines, but it preserves both security visibility and real-time performance.
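One wrinkle with chunked inspection is that sensitive data can straddle a chunk boundary, so scanning each chunk in isolation misses it. A rough sketch of one way to handle that, assuming a pattern with a known maximum match length, is to carry a short tail of the previous chunk into the next scan. The card-number pattern and the `scan_chunks` name are made up for illustration.

```python
import re
from typing import Iterable, Iterator, Tuple

# Hypothetical DLP rule: 16 digits in groups of four, separated by hyphens.
CARD_PATTERN = re.compile(r"\d{4}-\d{4}-\d{4}-\d{4}")
MAX_MATCH_LEN = 19  # longest string the pattern above can match


def scan_chunks(chunks: Iterable[str]) -> Iterator[Tuple[int, str]]:
    """Yield (absolute_offset, match), including matches that span chunks."""
    tail = ""  # last MAX_MATCH_LEN - 1 characters already seen
    seen = 0   # total characters consumed from earlier chunks
    for chunk in chunks:
        window = tail + chunk
        window_start = seen - len(tail)
        for m in CARD_PATTERN.finditer(window):
            # Skip anything that lies entirely inside the carried-over tail;
            # it belonged to a window that was already scanned.
            if m.end() > len(tail):
                yield window_start + m.start(), m.group()
        seen += len(chunk)
        tail = window[-(MAX_MATCH_LEN - 1):]


if __name__ == "__main__":
    pieces = ["card 4111-11", "11-1111-1111 ok"]
    print(list(scan_chunks(pieces)))
```

The memory cost is bounded by the tail length rather than the stream length, so inspection keeps pace with the stream. Patterns without a bounded match length would need a different approach, such as a streaming matcher.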

MCP transport flexibility. The MCP specification itself is evolving to better accommodate enterprise environments. The Streamable HTTP transport, for instance, offers more flexibility than a pure SSE transport: a server can answer a request with a single, ordinary HTTP response when streaming isn't available, and use an SSE stream when infrastructure allows it.
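The degrade-gracefully idea boils down to content negotiation. The sketch below is a simplified illustration rather than the MCP SDK's code: `choose_content_type`, `render`, and the `streaming_allowed` flag are hypothetical names, and the `Accept` parsing ignores quality values.

```python
import json
from typing import List, Set

JSON_TYPE = "application/json"
SSE_TYPE = "text/event-stream"


def accepted_types(accept_header: str) -> Set[str]:
    """Extract media types from an Accept header, dropping parameters like q=."""
    return {
        part.split(";")[0].strip().lower()
        for part in accept_header.split(",")
        if part.strip()
    }


def choose_content_type(accept_header: str, streaming_allowed: bool) -> str:
    """Prefer SSE when policy permits it; otherwise fall back to plain JSON."""
    accepted = accepted_types(accept_header)
    if streaming_allowed and SSE_TYPE in accepted:
        return SSE_TYPE
    if JSON_TYPE in accepted:
        return JSON_TYPE
    raise ValueError("client accepts neither JSON nor SSE under current policy")


def render(result: dict, progress: List[dict], content_type: str) -> str:
    """Serialize the same result either as an SSE stream or as one JSON body."""
    if content_type == SSE_TYPE:
        # Streaming path: intermediate messages first, final result last.
        return "".join(f"data: {json.dumps(m)}\n\n" for m in progress + [result])
    # Request-response path: only the final result is returned.
    return json.dumps(result)
```

In this sketch `streaming_allowed` stands in for whatever signal a deployment uses to decide that a network path can carry a long-lived response, for example a per-environment configuration flag.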

Zero-trust framing. Rather than trying to make streaming "look like" traditional traffic, adopt a zero-trust model that authenticates and authorizes at the connection level. If the identity, the endpoint, and the destination are all verified and continuously validated, the transport pattern matters less. This reframes the security conversation from "what does this traffic look like?" to "who is talking to whom, and are they allowed to?"
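"Continuously validated" is the part that differs most from a one-time check at connection setup. As a minimal sketch, assuming a session object with an expiry and an allow-list of destinations (both hypothetical, as are the `Session` and `guarded_stream` names), the authorization check can be repeated before every event rather than once per connection:

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Iterable, Iterator


@dataclass(frozen=True)
class Session:
    subject: str                       # verified identity of the caller
    allowed_destinations: FrozenSet[str]
    expires_at: float                  # epoch seconds


class AccessRevoked(Exception):
    """Raised when a session is no longer allowed to receive events."""


def authorize(session: Session, destination: str, now: float) -> None:
    if destination not in session.allowed_destinations:
        raise AccessRevoked(f"{session.subject} may not reach {destination}")
    if now >= session.expires_at:
        raise AccessRevoked(f"session for {session.subject} has expired")


def guarded_stream(
    events: Iterable[str],
    session: Session,
    destination: str,
    clock: Callable[[], float],
) -> Iterator[str]:
    """Re-check authorization before each event instead of once at connect."""
    for event in events:
        authorize(session, destination, clock())
        yield event
```

A long-lived connection then ends as soon as the session expires or the destination is no longer allowed, instead of outliving the credentials that opened it. In practice the clock would be something like `time.time`, and the session check would likely consult a token or policy service; it is injectable here so the behavior is easy to verify.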

Hybrid architectures. For organizations where full streaming simply can't pass security review in the near term, a hybrid approach — streaming within trusted network zones, with request-response bridging at security boundaries — can preserve most of the real-time benefits while staying within policy.
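At its simplest, request-response bridging means a component at the security boundary drains the internal stream and hands the outside world one bounded, complete response that existing tools can inspect. The following is a sketch under that assumption; the `bridge_to_response` name, the response shape, and the size limit are illustrative choices, not a standard.

```python
import json
from typing import Iterable


class BridgeLimitExceeded(Exception):
    """Raised when the buffered stream would exceed the allowed response size."""


def bridge_to_response(events: Iterable[str], max_bytes: int = 65536) -> str:
    """Collect streamed event payloads into a single JSON response body."""
    collected = []
    size = 0
    for data in events:
        size += len(data.encode("utf-8"))
        if size > max_bytes:
            # Refuse rather than truncate, so inspectors never see partial data
            # presented as a complete response.
            raise BridgeLimitExceeded(f"stream exceeded {max_bytes} bytes")
        collected.append(data)
    return json.dumps({"events": collected, "complete": True})
```

The trade-off is latency: the caller outside the boundary sees nothing until the internal stream finishes, which is why this pattern fits best where only the final hop needs to cross a strictly inspected boundary.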

The Conversation That Needs to Happen

The most important step isn't technical. It's organizational. Engineering teams and security teams need a shared understanding of what streaming protocols actually are, why they matter for AI capabilities, and what "good" looks like from a security perspective.

Too often, the conversation starts with a deployment request and ends with a rejection. It should start much earlier — with security teams involved in architecture decisions, with protocol-level education for compliance stakeholders, and with a shared roadmap that treats security and AI capability as complementary goals rather than competing ones.

Morgan doesn't want to block the future. She wants to make sure the future doesn't create the next breach. Those are different things, and the organizations that figure out how to give her both — real-time AI capability and genuine security assurance — will be the ones that ship while everyone else is still stuck in review.

The gap between what AI protocols need and what enterprise security allows is one of the most underappreciated bottlenecks in AI adoption today. It won't be solved by either side giving in — it'll be solved by building infrastructure that respects both.