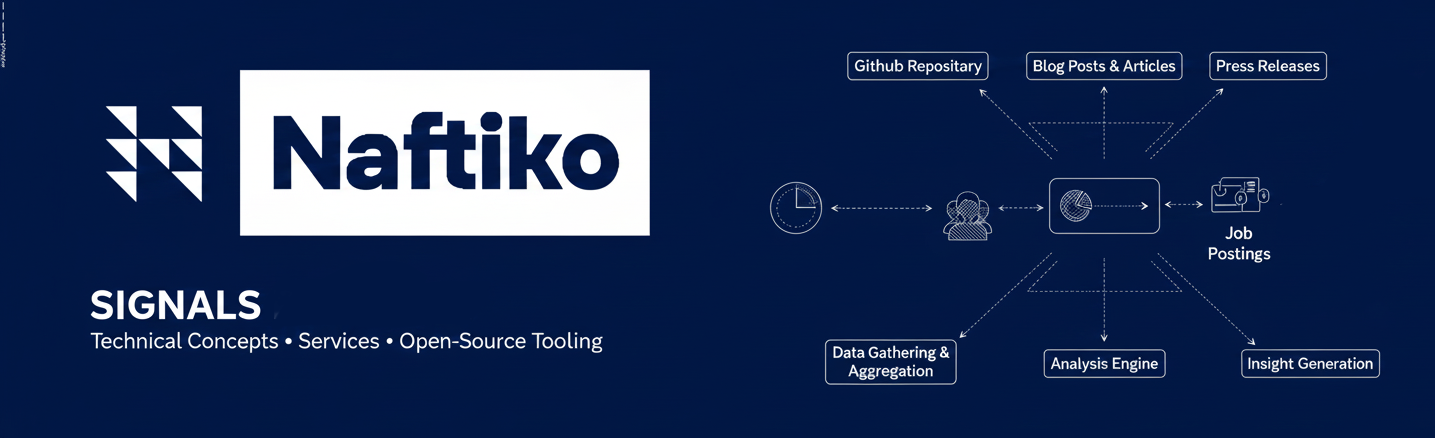

AI copilots have quickly become a board-level expectation. Once leadership sees a demo of natural-language queries flowing seamlessly into internal systems, the question is no longer if but how fast. And that pressure often lands squarely on the API organization.

A Familiar Persona, A New Problem

Meet Riley, Head of APIs.

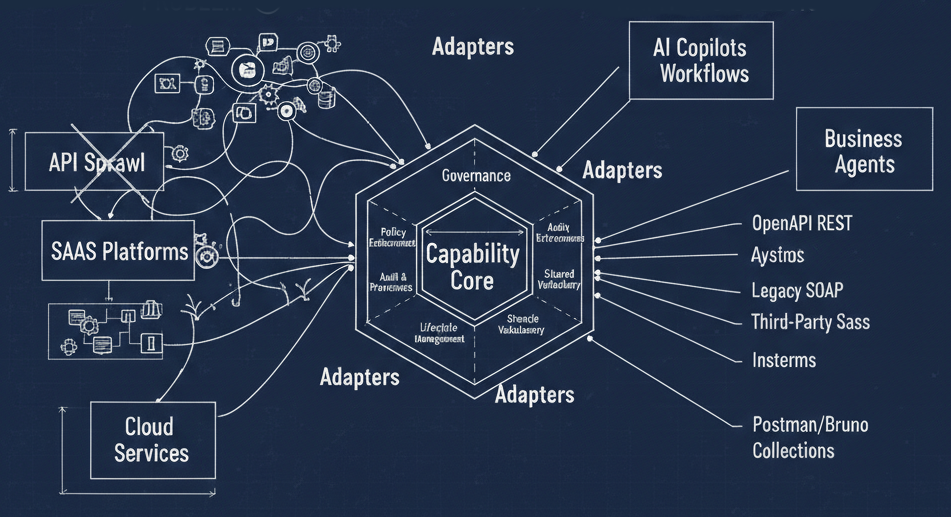

Riley’s teams have spent years maturing their API program. They are design-first. They standardize on OpenAPI. They’ve invested in governance, review processes, and shared practices that keep API sprawl mostly under control, even across dozens of distributed teams building internal and public APIs.

Now leadership wants an AI copilot layered on top of those APIs.

On the surface, this seems like a natural extension of existing work. The APIs already exist. The contracts are defined. The teams are disciplined. What could go wrong?

Quite a lot, it turns out.

From OpenAPI to MCP: A New Surface Area

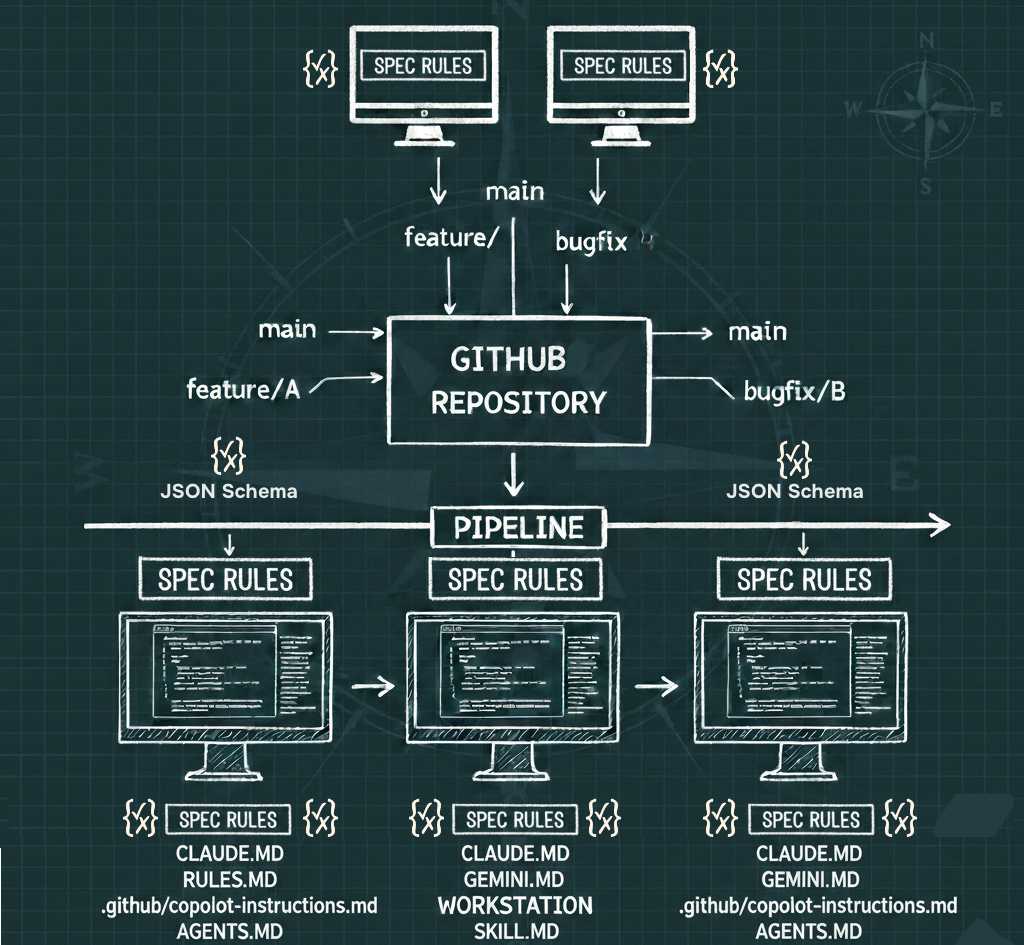

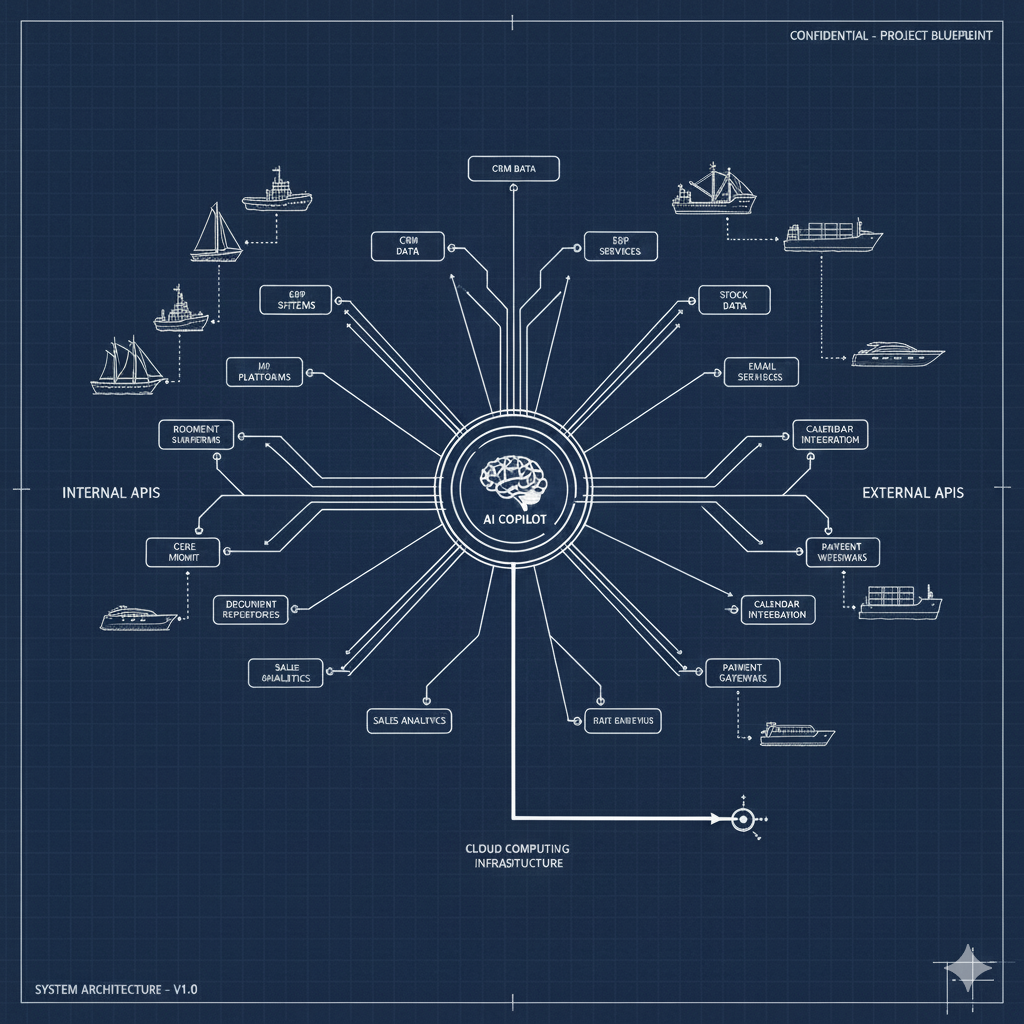

To support copilots, teams are now using OpenAPI documents, often with extensions, to generate Model Context Protocol (MCP) servers. This makes sense technically: MCP servers act as the connective tissue between LLMs and APIs, exposing API operations as tools a model can call and translating intent into executable calls.
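To make the generation step concrete, here is a minimal sketch of how an OpenAPI operation might be turned into an MCP-style tool descriptor. It assumes a simplified mapping (one tool per operation, parameters only, no request bodies) and is not the behavior of any particular generator. The function name `openapi_to_tools` and the sample `spec` are hypothetical; the `name`, `description`, and `inputSchema` keys follow the shape MCP uses for tool definitions.

```python
from typing import Any

HTTP_METHODS = {"get", "put", "post", "delete", "patch"}


def openapi_to_tools(spec: dict[str, Any]) -> list[dict[str, Any]]:
    """Derive one MCP-style tool descriptor per OpenAPI operation (illustrative only)."""
    tools = []
    for path, item in spec.get("paths", {}).items():
        for method, op in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            properties = {}
            required = []
            for param in op.get("parameters", []):
                properties[param["name"]] = param.get("schema", {})
                if param.get("required"):
                    required.append(param["name"])
            tools.append({
                # Fall back to a synthetic name when operationId is missing.
                "name": op.get("operationId", f"{method}_{path}"),
                "description": op.get("summary", ""),
                "inputSchema": {
                    "type": "object",
                    "properties": properties,
                    "required": required,
                },
            })
    return tools


# Hypothetical API fragment used only for illustration.
spec = {
    "paths": {
        "/orders/{orderId}": {
            "get": {
                "operationId": "getOrder",
                "summary": "Fetch a single order by ID",
                "parameters": [
                    {"name": "orderId", "in": "path", "required": True,
                     "schema": {"type": "string"}},
                ],
            }
        }
    }
}

tools = openapi_to_tools(spec)
```

Even in this small sketch, every choice (how tools are named, what goes into the description, which parameters are exposed) is a design decision that each team would otherwise make on its own.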

But while APIs have been governed as products, MCP servers are emerging as artifacts—generated quickly, owned loosely, and deployed independently by each team.

There is no shared tooling.

No consistent guidance.

No common lifecycle model.

Every team does “what works” to get their copilot feature unblocked.

The Result: MCP Server Sprawl

What Riley starts to see looks eerily familiar—just shifted one layer up the stack.

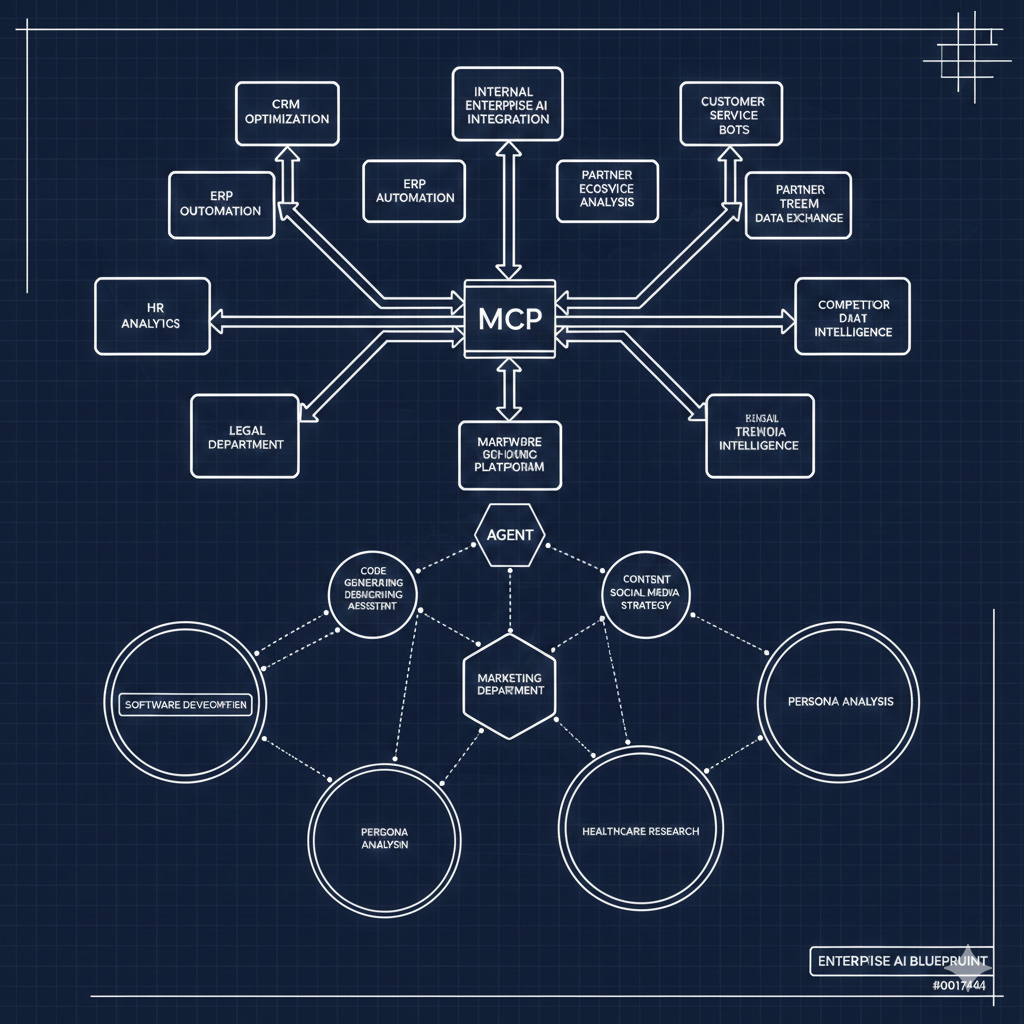

- Every API team now produces one or more MCP servers.

- Each MCP server exposes overlapping capabilities in slightly different ways.

- Naming, metadata, error handling, and permission models diverge.

- Discovery becomes tribal knowledge instead of a platform capability.
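One way to see this overlap is to group tools by the API operation they wrap. The sketch below assumes each team can export a simple manifest of its MCP tools, and that each tool records the operation behind it in a `source_operation` field. Both the manifest format and that field are assumptions made for illustration; they are not part of MCP or OpenAPI.

```python
from collections import defaultdict


def find_overlaps(manifests):
    """Return API operations exposed by more than one MCP server."""
    by_operation = defaultdict(list)
    for server, tools in manifests.items():
        for tool in tools:
            by_operation[tool["source_operation"]].append((server, tool["name"]))
    return {
        operation: exposures
        for operation, exposures in by_operation.items()
        if len({server for server, _ in exposures}) > 1
    }


# Hypothetical manifests from two teams.
manifests = {
    "orders-mcp": [
        {"name": "getOrder", "source_operation": "GET /orders/{orderId}"},
    ],
    "support-mcp": [
        {"name": "lookup_order", "source_operation": "GET /orders/{orderId}"},
        {"name": "open_ticket", "source_operation": "POST /tickets"},
    ],
}

overlaps = find_overlaps(manifests)
for operation, exposures in overlaps.items():
    print(operation, "is exposed as", exposures)
```

Here the same order lookup surfaces as `getOrder` on one server and `lookup_order` on another, which is exactly the kind of divergence that is hard to spot without a shared inventory.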

The organization has learned how to govern APIs, but MCP servers fall outside those established guardrails. They are not reviewed with the same rigor. They don’t show up in existing catalogs. They don’t clearly map back to API ownership or business domains.

The sprawl hasn’t gone away—it’s just changed shape.

Why Existing API Governance Doesn’t Transfer Cleanly

This isn’t a failure of OpenAPI or API design maturity. It’s a mismatch of abstractions.

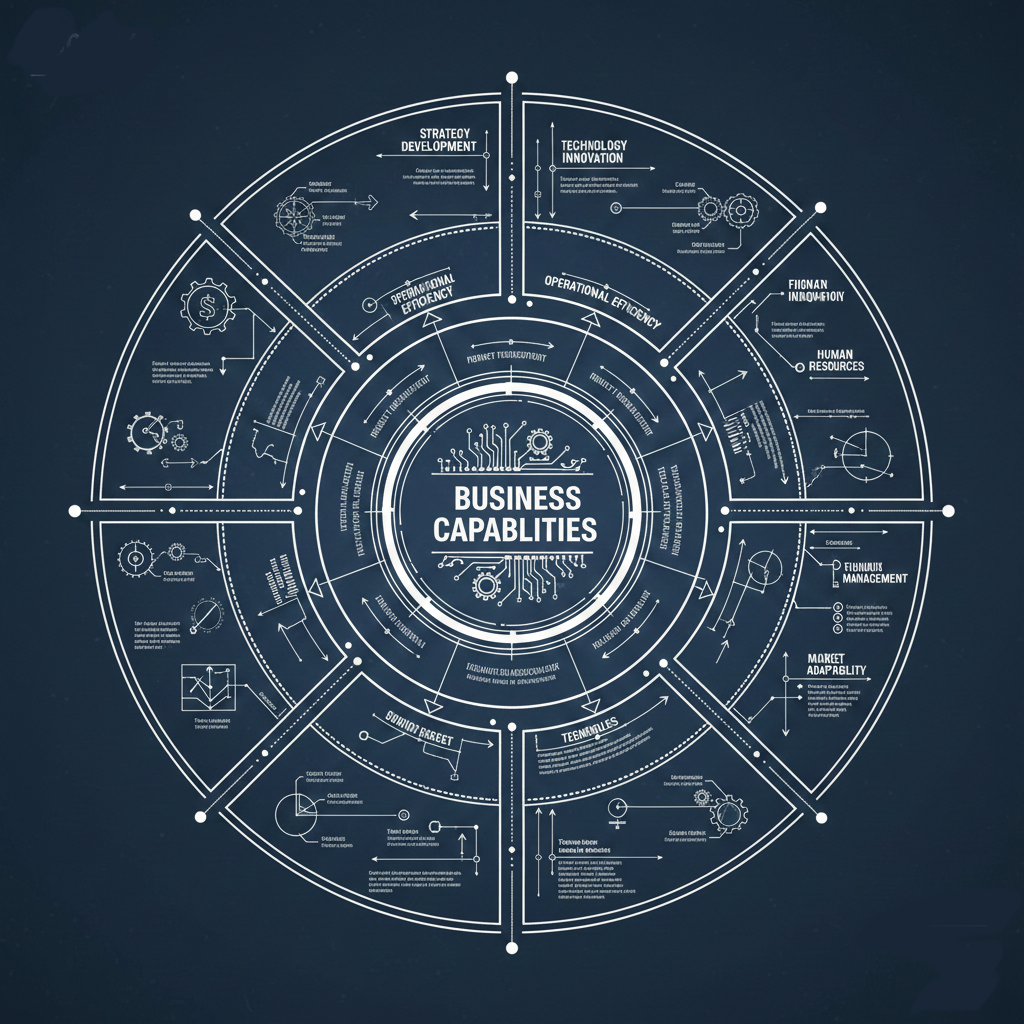

API governance evolved around:

- Stable contracts

- Explicit consumers

- Versioned lifecycles

- Human developers as primary users

MCP servers introduce:

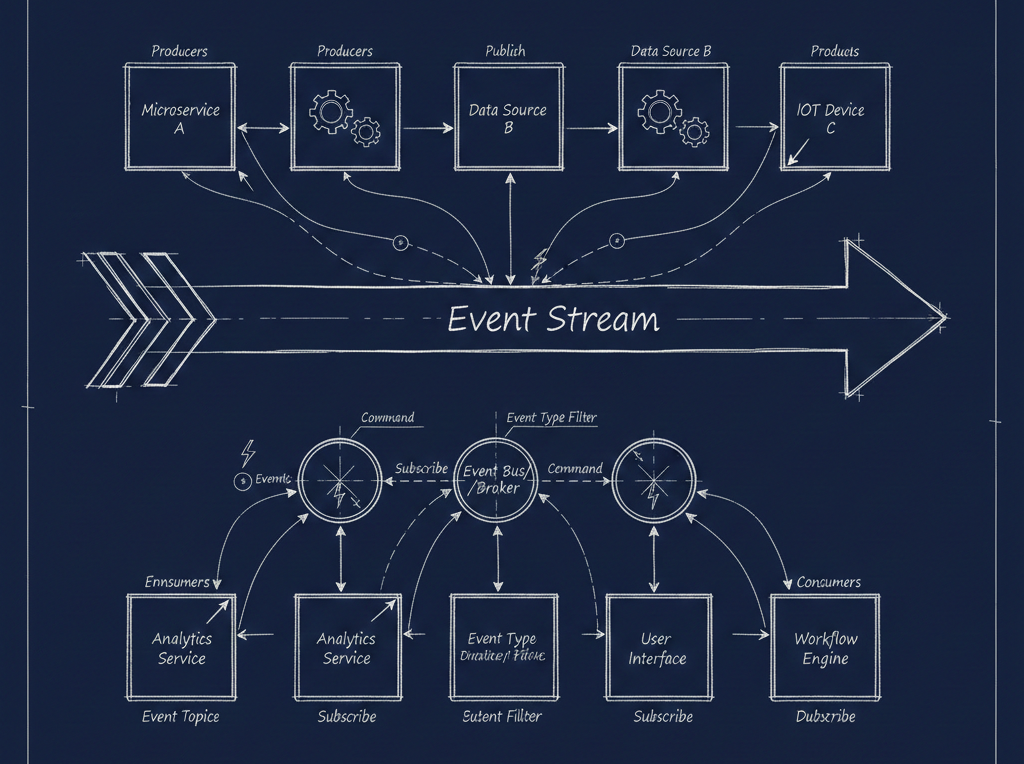

- Generated interfaces derived from APIs

- LLMs as first-class consumers

- Rapid iteration driven by prompts and behavior, not just schemas

- A new layer where “good enough” often beats “well designed”

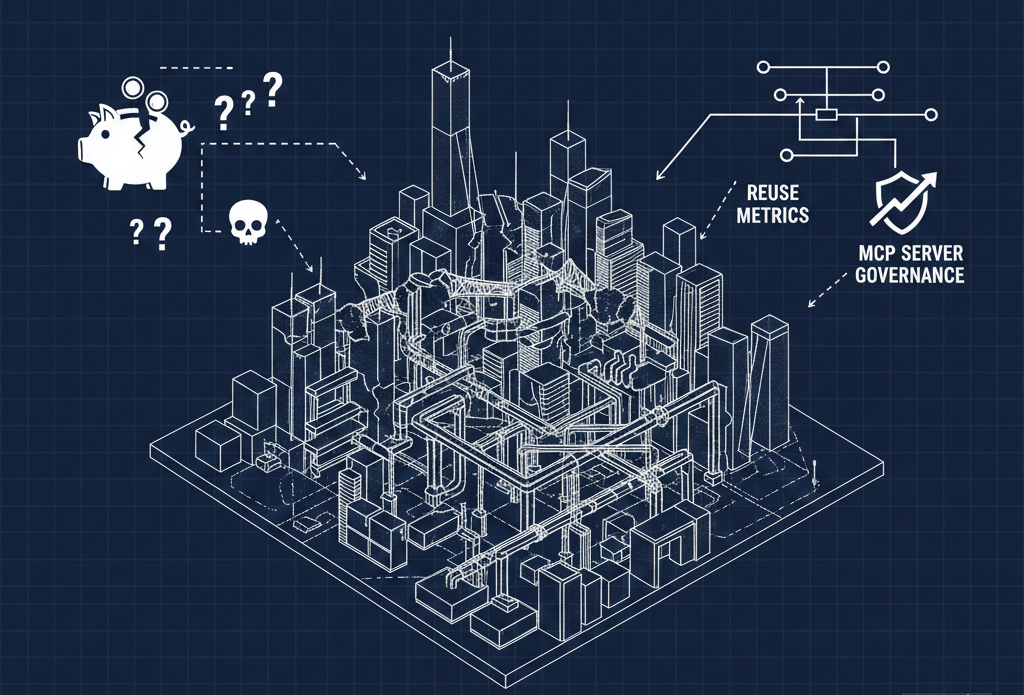

Without intentional design, MCP servers become a shadow platform—critical to AI experiences, but invisible to existing governance and platform tooling.

The Strategic Risk

In the short term, teams ship copilots. Demos succeed. Leadership is happy.

In the medium term, Riley faces a new kind of fragmentation:

- No consistency in how copilots interact with APIs

- No reusability across MCP servers

- No reliable way to discover or compose capabilities

- No clear accountability when things break or models behave unexpectedly

The organization has effectively recreated early-stage API chaos—this time optimized for AI speed instead of developer velocity.

The Core Question API Leaders Must Ask

The real problem isn’t “how do we generate MCP servers?” It’s:

How do we treat MCP servers as first-class platform assets, not disposable byproducts of AI experimentation?

Until API programs extend their design-first, governance-aware thinking into this new layer, copilots will quietly erode the very consistency and reuse those programs were built to protect.
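As a rough illustration of what "first-class" could mean in practice, the sketch below models a catalog record for an MCP server and flags missing governance metadata. The record fields, lifecycle stages, and the `governance_gaps` helper are all hypothetical; a real program would align them with its existing API catalog.

```python
from dataclasses import dataclass, fields

# Hypothetical lifecycle stages, mirroring a typical API lifecycle.
ALLOWED_LIFECYCLES = {"experimental", "active", "deprecated", "retired"}


@dataclass
class McpServerRecord:
    name: str
    owner_team: str = ""       # who is accountable when things break
    business_domain: str = ""  # where the server sits in the domain model
    source_api: str = ""       # the governed API this server was generated from
    lifecycle: str = ""        # one of ALLOWED_LIFECYCLES


def governance_gaps(record):
    """List the metadata a server is missing before it can be cataloged."""
    gaps = [f.name for f in fields(record) if not getattr(record, f.name)]
    if record.lifecycle and record.lifecycle not in ALLOWED_LIFECYCLES:
        gaps.append("lifecycle (unknown stage)")
    return gaps


record = McpServerRecord(name="support-mcp", owner_team="support-platform")
gaps = governance_gaps(record)
print(gaps)
```

A check like this could run wherever API reviews already happen, so that MCP servers enter the same catalog and ownership model as the APIs they wrap.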

Copilots may be the future—but without shared tooling and guidance for MCP servers, they risk becoming the next ungoverned integration surface hiding in plain sight.