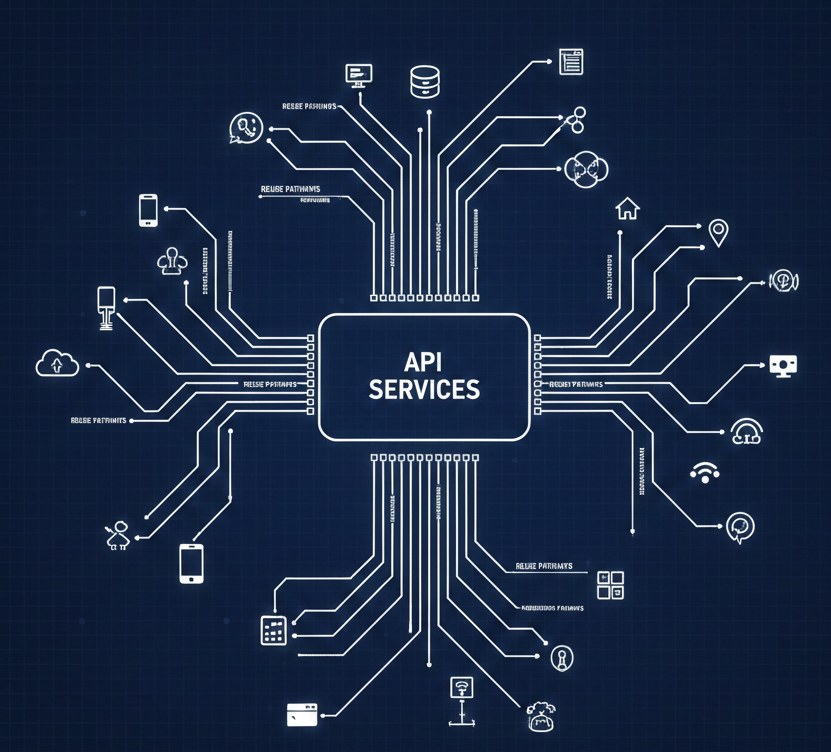

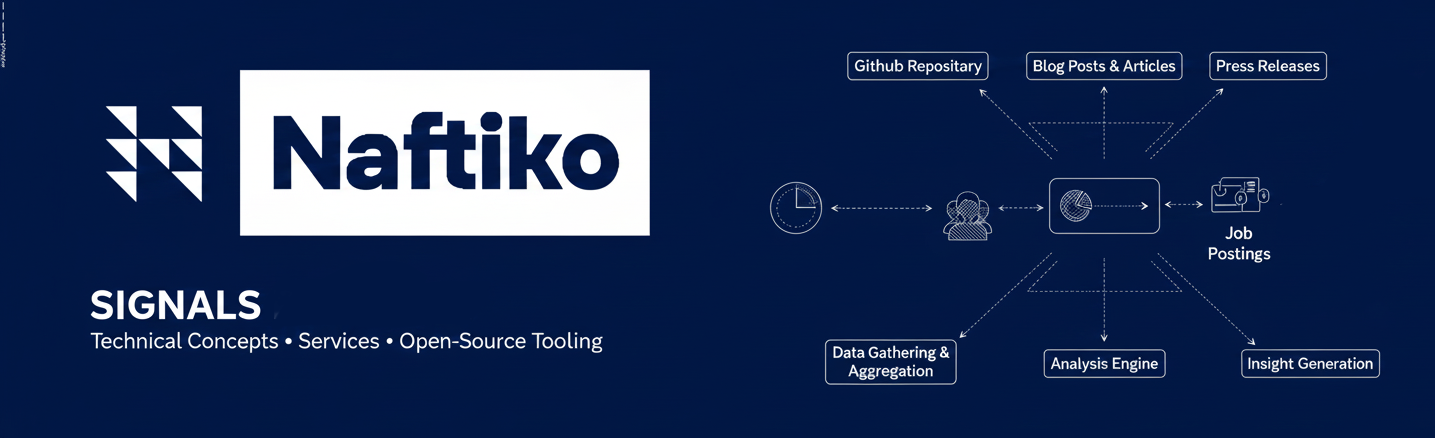

For years, hypermedia has been treated as either an academic concept or a historical artifact of early web architecture—something we nod to when talking about REST, but rarely center in modern system design. In today's episode of Naftiko Capabilities, I wanted to revisit hypermedia not as theory, but as a practical pattern that matters right now, especially as we try to integrate and automate AI across increasingly complex enterprise systems.

The Naftiko Capabilities podcast is intentionally about more than technology for technology’s sake. APIs, integrations, and platforms only matter insofar as they help organizations achieve real business outcomes. Hypermedia came up repeatedly in recent conversations because it sits at the intersection of adaptability, meaning, and action—three things that are becoming essential as AI systems begin to participate directly in our operational workflows.

Hypermedia Is About Connections, Not Endpoints

In my conversation with Mike Amundsen, we grounded hypermedia in a simple but powerful idea: the connection itself is the most important thing. Not the endpoints, not the data models on either side, but the live, descriptive connection that allows systems to interact in real time. Hypermedia enables what Alan Kay once described as extreme late binding—the ability to wait until the last responsible moment to decide how two things connect.

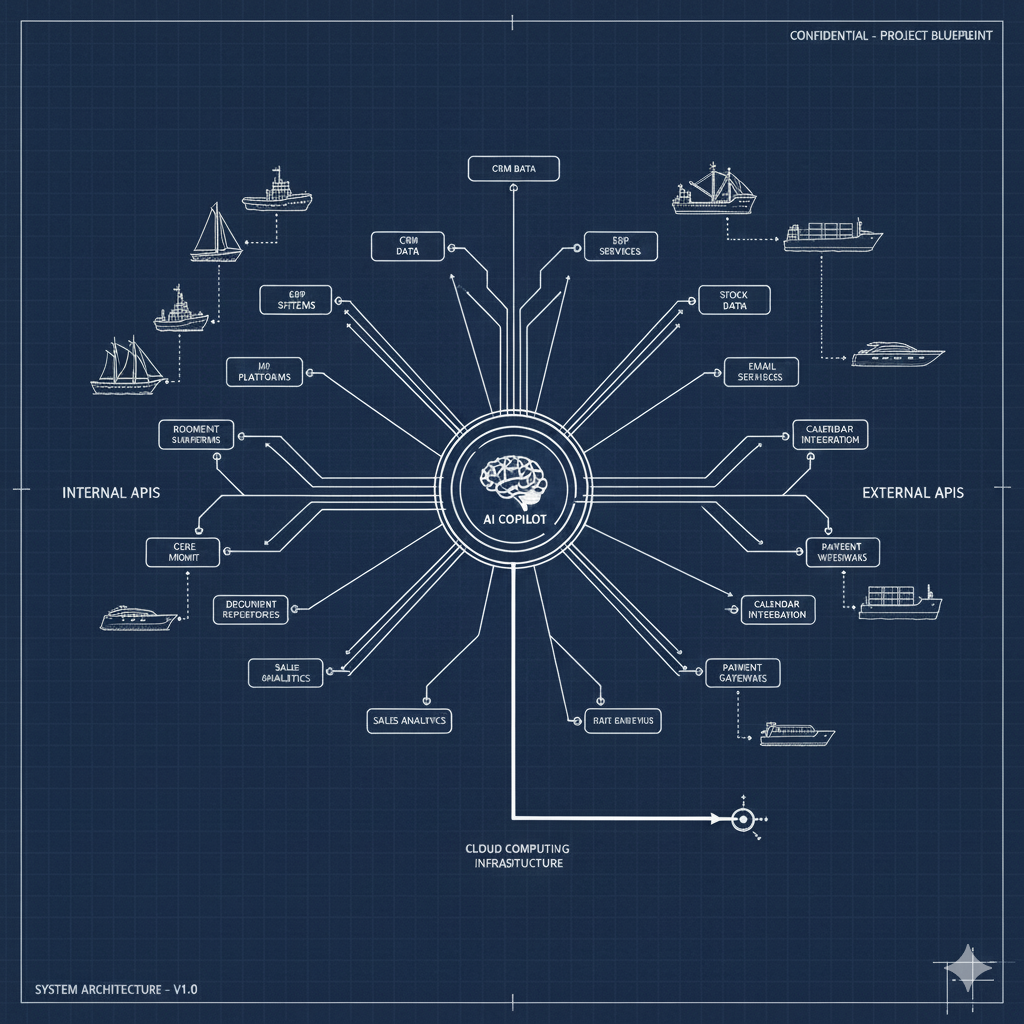

This matters because AI-driven systems are inherently dynamic. We can’t fully predict in advance which services, data, or actions will be needed. Hypermedia gives us a way to describe available actions, constraints, and expectations at runtime, using rich metadata that machines and humans can interpret together. Every connection becomes an opportunity to create something new, rather than merely execute a pre-planned integration.
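To make the late-binding idea concrete, here is a minimal sketch of a client that knows only a starting point and a few link relation names, and discovers everything else from the responses at runtime. The `RESPONSES` table, the `links` field, and the URLs are all assumptions invented for illustration; they stand in for a real server and are not taken from any particular hypermedia format.

```python
# Hypothetical in-memory "server": each response describes its own outgoing links.
# URLs, relation names, and the "links" field are illustrative assumptions.
RESPONSES = {
    "/": {"links": {"orders": "/orders"}},
    "/orders": {
        "items": ["order-1", "order-2"],
        "links": {"self": "/orders", "latest": "/orders/2"},
    },
    "/orders/2": {"id": "order-2", "links": {"self": "/orders/2"}},
}


def fetch(url):
    """Stand-in for an HTTP GET; returns the self-describing response."""
    return RESPONSES[url]


def follow(start, rels):
    """Walk from `start` by relation name, binding to URLs only at runtime."""
    doc = fetch(start)
    for rel in rels:
        links = doc.get("links", {})
        if rel not in links:
            # The server did not offer this connection in the current context.
            raise LookupError(f"relation {rel!r} not available here")
        doc = fetch(links[rel])
    return doc


latest = follow("/", ["orders", "latest"])
print(latest["id"])
```

The client never hard-codes `/orders/2`. If the server moved that resource, only the link it advertises would change, and the client would keep working.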

Meaning, Metadata, and Information Architecture

To make those connections possible, hypermedia relies on descriptive context—media types, methods, headers, parameters, and semantics. This is where information architecture, ontology, and taxonomy enter the picture. These disciplines aren’t about documentation polish; they’re about making systems usable by people you’ll never meet, to solve problems you never anticipated.

Mike framed this as building information systems that communicate meaning as movement and coordination, not just static structure. Semantic profile formats such as ALPS (Application-Level Profile Semantics) attempt to share that meaning explicitly, enabling late-bound interaction across organizational and technical boundaries. In a world where AI agents are consuming and acting on APIs, this shared understanding becomes a prerequisite, not a nice-to-have.

Resources, State, and Action

Kevin Swiber brought a complementary perspective grounded in domain-driven design and hypermedia formats like Siren. Hypermedia, in this framing, is about modeling the world as resources with both state and behavior. Treating resources as state machines, with defined properties and transitions, allows systems to communicate not just what is, but what can happen next.
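As a rough illustration of a resource as a state machine, the sketch below builds a Siren-shaped entity whose `actions` list changes with the resource's current state. The top-level keys (`class`, `properties`, `actions`, `links`) follow the Siren format; the order domain, the state names, the `TRANSITIONS` table, and the URLs are hypothetical and were not discussed in the episode.

```python
# Hypothetical state machine for an order: state -> {action name: next state}.
TRANSITIONS = {
    "pending": {"pay": "paid", "cancel": "cancelled"},
    "paid": {"ship": "shipped"},
    "shipped": {},
    "cancelled": {},
}


def order_entity(order_id, status):
    """Return a Siren-shaped dict exposing state plus the transitions allowed now."""
    href = f"/orders/{order_id}"  # illustrative URL scheme
    return {
        "class": ["order"],
        "properties": {"id": order_id, "status": status},
        # Only transitions valid in the current state are advertised.
        "actions": [
            {"name": name, "method": "POST", "href": f"{href}/{name}"}
            for name in TRANSITIONS[status]
        ],
        "links": [{"rel": ["self"], "href": href}],
    }


def apply_action(entity, action_name):
    """Perform a transition only if the entity itself advertises it."""
    allowed = {action["name"] for action in entity["actions"]}
    if action_name not in allowed:
        raise ValueError(f"action {action_name!r} not available in this state")
    props = entity["properties"]
    next_status = TRANSITIONS[props["status"]][action_name]
    return order_entity(props["id"], next_status)


order = order_entity(42, "pending")
order = apply_action(order, "pay")
print(order["properties"]["status"], [a["name"] for a in order["actions"]])
```

A consumer, human or machine, does not need to know the state machine in advance. It reads the current state and picks from whatever actions the response offers.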

This idea becomes especially relevant when contrasted with the task- or intent-based models that dominate current discussions of AI and the Model Context Protocol (MCP). Are we modeling behavior with structure attached, or structure with behavior attached? That philosophical tension has existed for decades, but AI is forcing us to confront it more directly. Hypermedia offers a pragmatic compromise: expose both state and possible transitions so that systems, human or machine, can navigate complexity safely.

Hypermedia in the MCP and AI Context

One of the most interesting threads in the conversation was how hypermedia concepts are re-emerging inside MCP and LLM-driven interactions. Even when a system isn’t “hypermedia-native,” we can inject hypermedia ideas by explicitly describing next actions, constraints, and transitions in responses. LLMs are particularly good at interpreting this descriptive layer and presenting it meaningfully to users.
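One way to picture "injecting" hypermedia into a system that isn't hypermedia-native is to wrap a tool's plain result with a description of what can happen next. The sketch below is an assumption-heavy illustration: `describe_response`, the `next_actions` envelope, and the `requires` and `constraint` fields are names made up for this example, not part of the MCP specification or any SDK.

```python
import json


def describe_response(data, next_actions):
    """Wrap a plain result with the actions and constraints available next.

    The envelope shape is a hypothetical convention, not an MCP-defined one.
    """
    return json.dumps({"data": data, "next_actions": next_actions}, indent=2)


# Hypothetical support-ticket result returned by some tool call.
ticket = {"id": "T-1", "status": "open"}

text = describe_response(
    ticket,
    [
        {
            "name": "assign_ticket",
            "description": "Assign this ticket to a team member.",
            "requires": ["assignee"],
        },
        {
            "name": "close_ticket",
            "description": "Close the ticket.",
            "constraint": "Only valid while status is 'open'.",
        },
    ],
)
print(text)
```

The resulting text is what a model would read. Because the available transitions and their constraints sit next to the data, the model can present or choose a next step without relying on out-of-band knowledge.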

This doesn’t mean hypermedia replaces event-driven, RPC, or task-based approaches. Instead, it becomes connective tissue that helps manage multiple mental models of the world simultaneously. As AI introduces yet another interaction paradigm, our challenge isn’t choosing a single model; it’s making them interoperable without losing meaning or control.

Hypermedia as a Business Capability

Ultimately, this episode reinforced why we’re framing these conversations around capabilities. Hypermedia isn’t just an architectural style; it’s a pattern that allows organizations to move faster without increasing risk. It supports reuse, adaptability, and AI orchestration by making systems more self-descriptive and resilient to change.

If you’re building for AI today—whether that’s context sharing, API reuse, or agent orchestration—hypermedia provides a way to align technical design with business outcomes. It helps ensure that what you build today can be understood, extended, and safely acted upon tomorrow by systems and people you haven’t even imagined yet.